Deep Reinforcement Learning for Beginners: Video Tutorial Series

Introduction to Reinforcement Learning

Reinforcement Learning (RL) is an area of machine learning concerned with how intelligent agents ought to take actions in an environment in order to maximize the notion of cumulative reward.

Key Concepts in Reinforcement Learning

Before diving into deep reinforcement learning, it's important to understand the foundational concepts like states, actions, policies, rewards, and value functions.

import gymnasium as gym

import numpy as np

# Create an environment

env = gym.make("CartPole-v1")

# Reset the environment

state = env.reset()

# Take a random action

action = env.action_space.sample()

next_state, reward, done, info, _ = env.step(action)

print(f"State: {state}")

print(f"Action: {action}")

print(f"Reward: {reward}")

print(f"Done: {done}")

Deep Q-Networks (DQN)

In this section, we'll examine how deep neural networks can be used to approximate Q-values in reinforcement learning problems with large state spaces.

What are Deep Q-Networks?

Deep Q-Networks combine Q-learning with deep neural networks to handle high-dimensional state spaces. Instead of maintaining a table of Q-values, we use a neural network to approximate the Q-function.

import torch

import torch.nn as nn

import torch.optim as optim

class DQN(nn.Module):

def __init__(self, input_dim, output_dim):

super(DQN, self).__init__()

self.network = nn.Sequential(

nn.Linear(input_dim, 128),

nn.ReLU(),

nn.Linear(128, 128),

nn.ReLU(),

nn.Linear(128, output_dim)

)

def forward(self, x):

return self.network(x)

# Example instantiation

state_dim = 4 # CartPole has 4 state dimensions

action_dim = 2 # CartPole has 2 possible actions

model = DQN(state_dim, action_dim)

optimizer = optim.Adam(model.parameters(), lr=0.001)

Experience Replay

One of the key innovations in DQN is experience replay, which helps break correlations between consecutive samples:

class ReplayBuffer:

def __init__(self, capacity):

self.capacity = capacity

self.buffer = []

self.position = 0

def push(self, state, action, reward, next_state, done):

if len(self.buffer) < self.capacity:

self.buffer.append(None)

self.buffer[self.position] = (state, action, reward, next_state, done)

self.position = (self.position + 1) % self.capacity

def sample(self, batch_size):

batch = random.sample(self.buffer, batch_size)

state, action, reward, next_state, done = map(np.stack, zip(*batch))

return state, action, reward, next_state, done

def __len__(self):

return len(self.buffer)

Policy Gradient Methods

While DQN focuses on learning value functions, policy gradient methods directly optimize the policy.

Related Posts

More content from the Reinforcement Learning category and similar topics

Learn the fundamentals of Functional Data Analysis and how to implement key techniques using R.

Shared topics:

A simple explaination of projectiong points onto a hyperspace.

Shared topics:

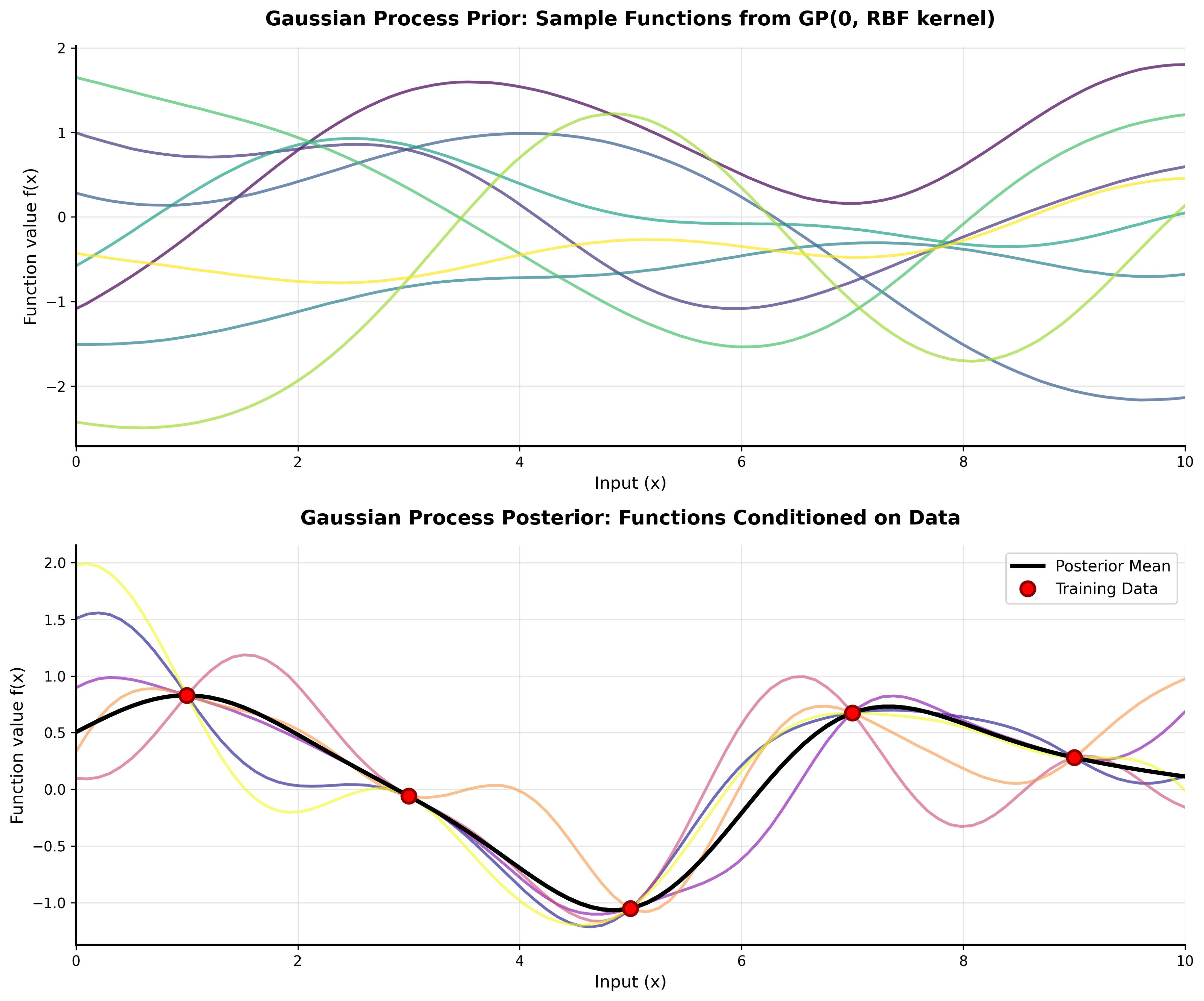

A comprehensive tutorial on understanding Gaussian Processes with interactive visualizations and practical examples.

Shared topics: